Face Swapping Explained: How It Works + Safe Use

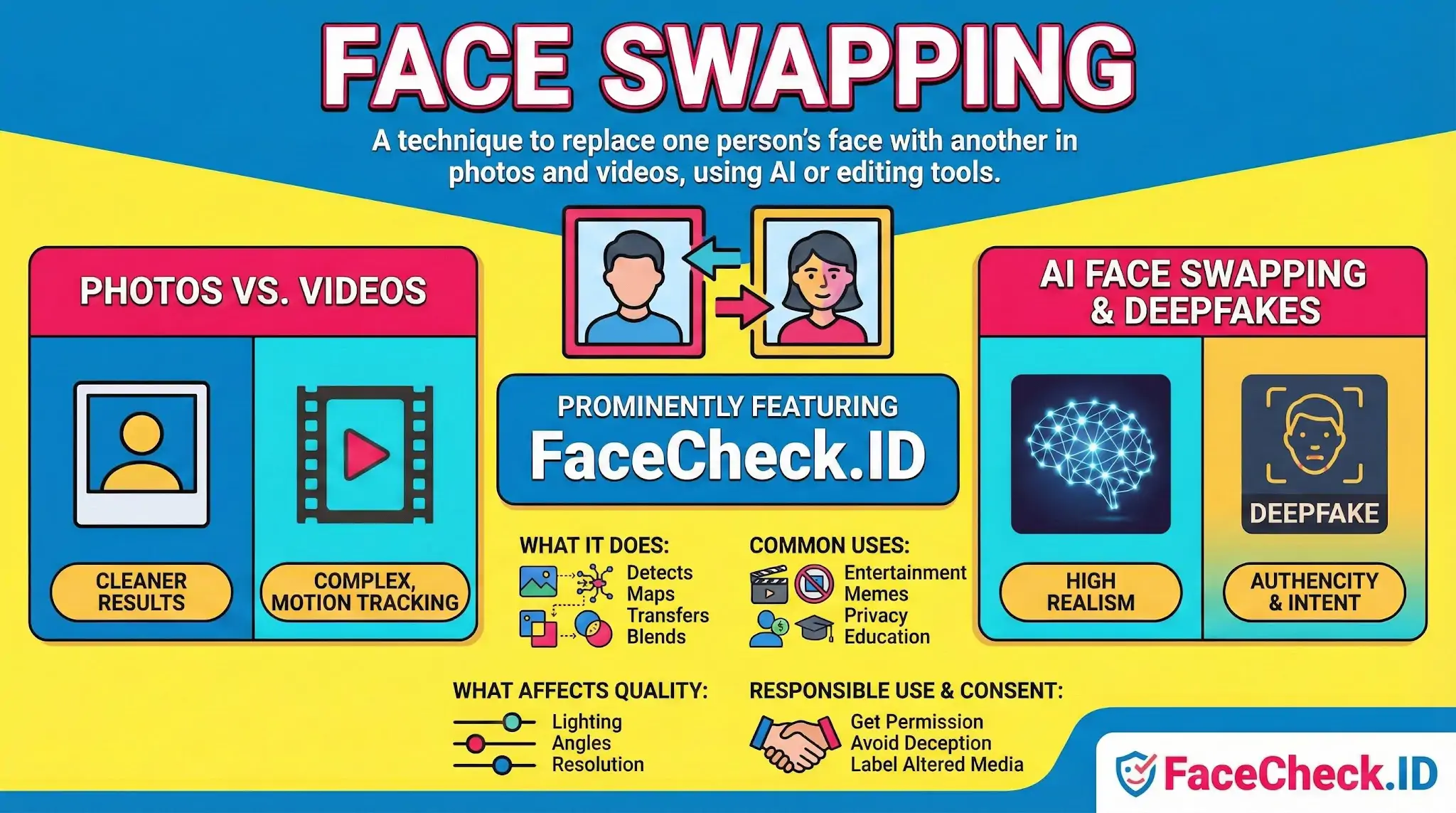

Face swapping is a photo and video editing technique that replaces one person’s face with another person’s face while keeping the original head position, facial expression, lighting, and scene. It is commonly done with AI face swap apps, deep learning models, or standard editing tools to create realistic or playful results.

What face swapping does

Face swapping typically:

- Detects faces in an image or video

- Maps key facial landmarks such as the eyes, nose, mouth, and jawline

- Transfers the source face onto the target face

- Blends skin tone, shadows, and edges to match the target scene

- Optionally adjusts color, sharpness, and texture for realism
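Landmark-based pipelines often implement the mapping and transfer steps by first aligning the source face to the target with a similarity transform (uniform scale, rotation, and translation) fitted to corresponding landmarks. The sketch below shows only that alignment step in NumPy, using Umeyama's least-squares method. It assumes you already have matching (x, y) landmark arrays from some face detector, and the function names are hypothetical. Many AI face swap tools use learned models instead, so treat this as one classical building block, not how any particular app works.

```python
import numpy as np


def estimate_similarity(src, dst):
    """Fit scale, rotation R, and translation t so that
    scale * R @ p + t maps each source landmark p onto its target landmark
    in the least-squares sense (Umeyama's method).
    src, dst: arrays of shape (N, 2) with corresponding points."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_mean, dst_mean = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - src_mean, dst - dst_mean
    # Cross-covariance between the centered point sets
    cov = dst_c.T @ src_c / len(src)
    U, S, Vt = np.linalg.svd(cov)
    # Flip the last axis if needed so R is a rotation, not a reflection
    d = np.ones(2)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        d[-1] = -1.0
    R = U @ np.diag(d) @ Vt
    # Scale = trace(diag(S) @ diag(d)) / variance of the source points
    scale = (S * d).sum() / (src_c ** 2).sum(axis=1).mean()
    t = dst_mean - scale * R @ src_mean
    return scale, R, t


def apply_similarity(points, scale, R, t):
    """Map (N, 2) points with the fitted transform."""
    return scale * np.asarray(points, dtype=float) @ R.T + t
```

In a full pipeline, the fitted transform would then be used to warp the source face pixels into the target frame before the blending step.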

Face swapping in photos vs videos

Photo face swapping focuses on one or more static images. It usually produces cleaner results because there is no motion to track.

Video face swapping must track a face across frames while preserving expressions and head movement. This is more complex and often needs stronger AI models to avoid flicker, misalignment, or unnatural blending.
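One simple way to reduce landmark jitter, a common source of flicker, is to smooth each landmark's position over time. This minimal sketch applies an exponential moving average to per-frame landmark arrays; the function name and the `alpha` default are illustrative assumptions, and production trackers typically use more sophisticated filtering.

```python
import numpy as np


def smooth_landmarks(frames, alpha=0.6):
    """Exponential moving average over per-frame landmark arrays.
    frames: sequence of (N, 2) arrays, one per video frame.
    alpha: weight given to the current frame (1.0 means no smoothing)."""
    smoothed = []
    state = None
    for pts in frames:
        pts = np.asarray(pts, dtype=float)
        # Blend the new detection with the running estimate
        state = pts if state is None else alpha * pts + (1 - alpha) * state
        smoothed.append(state)
    return smoothed
```

A smaller `alpha` suppresses more jitter but makes the swapped face lag behind fast head movements, so the value is a trade-off rather than a fixed setting.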

Common uses

- Entertainment and memes

- Creative content, parody, and social media edits

- Movie and marketing mockups

- Gaming and character edits

- Privacy edits, such as replacing faces in published visuals with consent

- Historical or educational visualizations when clearly labeled

AI face swapping and deepfakes

Modern face swapping often uses AI. When the result is highly realistic and used to mimic a real person, it can overlap with deepfakes. The key difference is intent and realism: face swapping can be simple and obvious, while deepfakes are usually designed to look authentic.

What affects face swap quality

Better results come from:

- Clear, front-facing faces with good lighting

- Similar angles between the source and target faces

- High-resolution images

- Minimal obstructions (such as hair covering the eyes or masks) and little motion blur

- Matching skin tone and color grading
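Some of these factors can be checked before attempting a swap. The sketch below flags small crops, likely blur (using the variance of a Laplacian filter, a common sharpness heuristic), and poor exposure for an 8-bit grayscale face crop. The thresholds and function names are hypothetical placeholders, not tuned values; adjust them for your own images.

```python
import numpy as np


def laplacian_variance(gray):
    """Variance of a 4-neighbor Laplacian response; low values suggest blur."""
    g = np.asarray(gray, dtype=float)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def precheck_face(gray_face, min_side=128, min_sharpness=50.0,
                  exposure_range=(40, 215)):
    """Return warnings for an 8-bit grayscale face crop of shape (H, W).
    All thresholds are illustrative assumptions, not tuned values."""
    warnings = []
    h, w = gray_face.shape
    if min(h, w) < min_side:
        warnings.append(f"low resolution ({w}x{h})")
    if laplacian_variance(gray_face) < min_sharpness:
        warnings.append("possible blur")
    lo, hi = exposure_range
    if not lo <= float(np.mean(gray_face)) <= hi:
        warnings.append("poor exposure")
    return warnings
```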

Common issues include warped features, mismatched lighting, blurry edges, and unnatural skin texture.
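Mismatched lighting and hard, visible seams are often reduced with color matching and a soft blending mask. This minimal NumPy sketch assumes the source face has already been warped into the target frame (same height and width, RGB values in the 0 to 255 range) and that `mask` marks the face region. It matches each channel's mean and standard deviation inside the mask (a simplified, RGB-only form of statistics-based color transfer) and then blends through a feathered mask. Function names and defaults are hypothetical.

```python
import numpy as np


def match_color(src, ref, mask):
    """Shift and scale each channel of src so its mean and std inside
    mask match those of ref in the same region."""
    out = src.astype(float).copy()
    m = mask.astype(bool)
    for c in range(src.shape[2]):
        s = out[..., c][m]
        r = ref[..., c].astype(float)[m]
        s_std = s.std() or 1.0  # avoid dividing by zero on flat regions
        out[..., c] = (out[..., c] - s.mean()) / s_std * r.std() + r.mean()
    return np.clip(out, 0, 255)


def feather(mask, radius=3):
    """Soften a binary (H, W) mask with repeated 3x3 box blurs."""
    a = mask.astype(float)
    h, w = a.shape
    for _ in range(radius):
        p = np.pad(a, 1, mode="edge")
        a = sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0
    return a


def blend(face, target, mask, radius=3):
    """Alpha-blend face over target using the feathered mask."""
    alpha = feather(mask, radius)[..., None]
    return alpha * face + (1 - alpha) * target
```

Real tools often use more robust approaches, such as Poisson (gradient-domain) blending, but the idea of matching color statistics and hiding the boundary is the same.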

Responsible use and consent

Face swapping should be done ethically:

- Get permission from the person whose face is used

- Avoid deceptive or harmful content

- Clearly label altered media when it could mislead

- Follow platform rules and local laws related to identity, privacy, and impersonation

FAQ

How does face swapping differ from deepfakes, and why does the difference matter for face recognition search?

Face swapping typically places one person’s face onto another person’s head and body within an image, often keeping the target’s pose, background, and context. “Deepfakes” is a broader umbrella that can include fully synthetic video, lip-sync, reenactment, or entirely generated faces. The distinction matters because face recognition search engines rely heavily on facial geometry and texture: a simple swap can produce an embedding that partially matches the swapped-in identity while the surrounding context still points to the original source, which creates misleading investigative leads.
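A toy illustration of that partial match: face recognition systems commonly compare face embeddings with a similarity score, such as cosine similarity, against a threshold. The 4-dimensional vectors and the 0.5 threshold below are made up for illustration (real embeddings are learned, much higher-dimensional vectors, and each engine sets its own thresholds). The point is only that a mixed signal can clear the threshold for two different people at once.

```python
import numpy as np


def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def matching_identities(query, gallery, threshold=0.5):
    """Names in gallery whose reference embedding clears the threshold."""
    return [name for name, ref in gallery.items()
            if cosine(query, ref) >= threshold]


# Hypothetical toy embeddings for two people
person_a = np.array([1.0, 0.0, 0.0, 0.0])
person_b = np.array([0.0, 1.0, 0.0, 0.0])
# A swapped face carrying mostly B's features, with some of A's cues left over
swapped = 0.8 * person_b + 0.6 * person_a

print(matching_identities(swapped, {"A": person_a, "B": person_b}))
```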

What result patterns can suggest an image was face-swapped when you run a face recognition search?

Common patterns include: (1) top matches span two very different “clusters” of identities (e.g., some results resemble Person A while others resemble Person B); (2) the same background/photo-session appears across results but with different faces; (3) strong facial similarity but inconsistent non-face cues (ears, hairline, neck, tattoos, age, or lighting direction); and (4) multiple sites hosting the same picture with noticeably different face details. Treat these as manipulation signals, not proof.
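Pattern (1) can be checked roughly if you have embeddings for the top results, for example by running a local face-embedding model over the result images yourself; search engines do not necessarily expose such vectors. The sketch below splits the results into two groups with a tiny 2-means procedure on normalized vectors and reports whether both groups are sizable and dissimilar. The function names, `min_share`, and `max_center_sim` are hypothetical, illustrative choices.

```python
import numpy as np


def split_two_clusters(emb, iters=10):
    """Tiny 2-means on L2-normalized embeddings (rows of emb),
    seeded with the least similar pair of results."""
    x = emb / np.linalg.norm(emb, axis=1, keepdims=True)
    sim = x @ x.T
    i, j = np.unravel_index(np.argmin(sim), sim.shape)
    centers = x[[i, j]].copy()
    for _ in range(iters):
        # Assign each result to the more similar center (cosine similarity)
        labels = np.argmax(x @ centers.T, axis=1)
        for k in range(2):
            members = x[labels == k]
            if len(members):
                c = members.mean(axis=0)
                norm = np.linalg.norm(c)
                if norm > 0:
                    centers[k] = c / norm
    return labels, centers


def looks_like_two_identities(emb, min_share=0.25, max_center_sim=0.6):
    """True if the results split into two sizable, dissimilar groups."""
    labels, centers = split_two_clusters(np.asarray(emb, dtype=float))
    share = np.bincount(labels, minlength=2) / len(labels)
    return bool(share.min() >= min_share
                and centers[0] @ centers[1] <= max_center_sim)
```

A `True` result is just one more manipulation signal to weigh alongside the other patterns, not proof of a swap.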

How can a face swap create “mixed identity trails” across the web, and how should you interpret them?

When a swapped image spreads, reposts may attach the swapped face to captions, usernames, or biographies belonging to someone else, creating a trail where the face seems linked to unrelated identities. In a face recognition search engine, this can look like a person “has multiple names” or “multiple profiles.” The safer interpretation is: the results are leads about where similar face pixels appear—not a reliable statement about who the person is—until you validate original sources, posting timelines, and consistent corroborating details.
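One way to keep a mixed identity trail organized is to group reposts by the underlying picture and record every name attached to each one, along with the earliest posting you can find. The sketch below assumes you have already collected post records by hand; the field names, the per-image `fingerprint` (for example a perceptual hash you compute yourself), and the example URLs are all hypothetical.

```python
from collections import defaultdict
from datetime import date


def summarize_trail(posts):
    """Group post records by image fingerprint and report the distinct
    names attached to each image plus its earliest known posting."""
    groups = defaultdict(list)
    for post in posts:
        groups[post["fingerprint"]].append(post)
    summary = {}
    for fingerprint, items in groups.items():
        names = sorted({p["name"] for p in items})
        earliest = min(items, key=lambda p: p["posted"])
        summary[fingerprint] = {
            "names": names,
            "mixed_identity": len(names) > 1,  # same picture, several names
            "earliest_url": earliest["url"],
            "earliest_date": earliest["posted"],
        }
    return summary


# Hypothetical records gathered during an investigation
example = [
    {"fingerprint": "h1", "name": "Alex", "posted": date(2023, 5, 1), "url": "https://example.com/a"},
    {"fingerprint": "h1", "name": "Sam", "posted": date(2022, 1, 9), "url": "https://example.com/b"},
    {"fingerprint": "h2", "name": "Alex", "posted": date(2024, 2, 2), "url": "https://example.com/c"},
]

for fp, info in summarize_trail(example).items():
    print(fp, info)
```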

What is the safest workflow to investigate suspected face-swapped content using face recognition search engines?

Use a multi-step approach: (1) extract several frames/crops (full face, left/right half-face, and a wider crop including hairline/ears); (2) run searches with each crop and compare whether the same identity cluster persists; (3) separately run a traditional reverse image search on the full image (to track the background/body/photo set); (4) prioritize earliest-known postings and higher-quality originals; and (5) only draw conclusions when face matches and non-face evidence align. If they diverge, assume manipulation is plausible.
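Step (1) can be scripted once you have a face bounding box. This sketch derives the full-face, left-half, right-half, and wider-context crops from a box in pixel coordinates and clips them to the image. The function name and the 40% context margin are assumptions. Left and right here are in image coordinates (the viewer's left), not the subject's.

```python
def make_crops(box, img_w, img_h, context=0.4):
    """box = (x0, y0, x1, y1) face bounding box in pixels.
    Returns named (left, upper, right, lower) crop boxes clipped to the image."""
    x0, y0, x1, y1 = box
    w, h = x1 - x0, y1 - y0
    mid = x0 + w // 2
    # Extra margin so the wide crop includes hairline, ears, and neck
    dx, dy = int(w * context), int(h * context)

    def clip(b):
        bx0, by0, bx1, by1 = b
        return (max(0, bx0), max(0, by0), min(img_w, bx1), min(img_h, by1))

    return {
        "full_face": clip((x0, y0, x1, y1)),
        "left_half": clip((x0, y0, mid, y1)),
        "right_half": clip((mid, y0, x1, y1)),
        "wide": clip((x0 - dx, y0 - dy, x1 + dx, y1 + dy)),
    }
```

The returned tuples use the same `(left, upper, right, lower)` order that Pillow's `Image.crop` expects, so each crop can be saved and searched separately.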

How can FaceCheck.ID (or similar tools) be used to reduce confusion when face swapping is suspected?

FaceCheck.ID can add value by quickly surfacing where visually similar faces appear across many sites, helping you spot identity “clusters” and conflicting contexts. To reduce confusion, run multiple queries (different crops/frames) and compare whether the top results converge on one consistent person. If results split into two strong clusters or the same underlying image appears with different faces, treat the case as potentially face-swapped and avoid using any single hit as identification.
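One rough way to judge whether multiple queries converge is to measure how much their top results overlap. The sketch below assumes you have recorded the ranked result URLs for each crop or frame yourself; it does not call FaceCheck.ID or any other service, and the function names and `top_k` default are hypothetical. It reports the mean pairwise Jaccard overlap: values near 1 mean the queries keep returning the same pages, and values near 0 mean they diverge.

```python
from itertools import combinations


def jaccard(a, b):
    """Overlap of two result lists as |A ∩ B| / |A ∪ B|."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def convergence(results_by_query, top_k=10):
    """Mean pairwise Jaccard overlap of each query's top_k result URLs.
    results_by_query: {query_label: [url, ...]} ranked best-first.
    A single query has nothing to disagree with, so it scores 1.0."""
    tops = [urls[:top_k] for urls in results_by_query.values()]
    pairs = list(combinations(tops, 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)
```

URL overlap is crude, since the same photo can be hosted at many addresses, so a low score is a prompt to look closer rather than a verdict.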

Recommended Posts Related to face swapping

- How to Find and Remove Nude Deepfakes With FaceCheck.ID: A Step-by-Step Guide

  Face-swapping apps (way too easy to access).

- Find & Remove Deepfake Porn of Yourself: Updated 2025 Guide

  Face swapping and "nudifying" tools are everywhere, sometimes sold on shady sites or shared in online communities.