Synthetic Media Explained: Benefits, Risks & Deepfakes

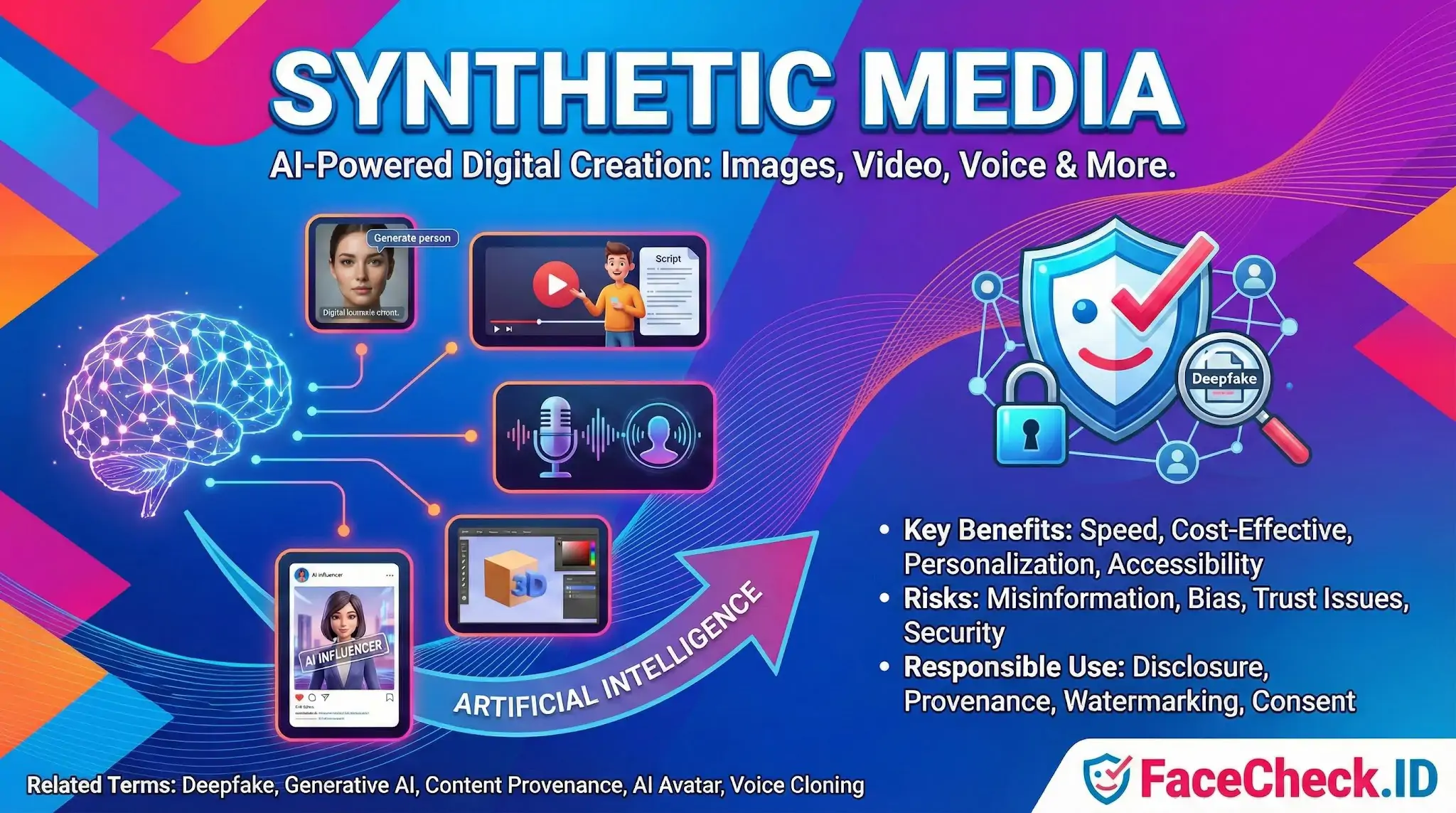

Synthetic media is digital content created or modified using artificial intelligence instead of being captured directly from the real world. It can include images, videos, audio, text, and 3D content generated from data and prompts, often designed to look or sound realistic.

Synthetic media is widely used in marketing, entertainment, education, product design, customer support, and accessibility. It is also closely connected to deepfakes and other AI-generated content, which is why authenticity and responsible use matter.

What counts as synthetic media

Common examples of synthetic media include:

- AI-generated images created from text prompts or reference photos

- AI-generated video such as animated scenes, AI avatars, and videos created from scripts

- Synthetic voice, including text-to-speech, voice cloning, and dubbing into new languages

- AI-assisted photo and video edits like background replacement, object removal, face swapping, and upscaling

- Virtual influencers and digital humans used in ads, livestreams, and social content

- Generated music and sound effects used for games, podcasts, and videos

How synthetic media is created

Synthetic media is typically produced using machine learning models trained on large datasets. Popular approaches include:

- Generative adversarial networks (GANs), used for realistic image and video generation

- Diffusion models, commonly used for high-quality image generation and editing

- Large language models, used to generate scripts, captions, and other text content

- Neural text-to-speech systems, used to synthesize natural-sounding voices

Many tools combine these techniques so creators can produce multimodal content from a single prompt.
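The two image-generation approaches above can be summarized by their standard textbook formulations. A GAN trains a generator $G$ against a discriminator $D$ in a minimax game, while a diffusion model learns to reverse a process that gradually adds Gaussian noise to a clean image $x_0$ over steps $t$. Production tools typically use refined variants, so treat these as the basic idea rather than what any specific product implements.

```latex
% GAN: D learns to tell real samples x from generated samples G(z); G learns to fool D
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]

% Diffusion (forward process): a noisy version x_t of the clean image x_0
x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon,
  \qquad \epsilon \sim \mathcal{N}(0, I),
  \qquad \bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s
```

Generation runs the learned denoising in reverse, starting from pure noise; in text-to-image models, the prompt conditions each denoising step.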

Key benefits

Synthetic media is popular because it can:

- Speed up content production by automating drafts, variations, and edits

- Reduce costs for shoots, voice talent, and post production

- Enable personalization such as localized ads or individualized training videos

- Support localization through dubbing, lip sync, and translated scripts

- Improve accessibility with narration, captions, and simplified visuals

Risks and challenges

Synthetic media can also introduce serious concerns:

- Misinformation and impersonation through deepfakes or fake audio

- Copyright and training data issues depending on the model and licensing

- Bias and harmful outputs if training data is skewed

- Loss of trust when audiences cannot verify what is real

- Security and fraud such as spoofed voice calls or fake identity content

Synthetic media vs deepfakes

Synthetic media is the broader category for AI-generated or AI-modified content.

Deepfakes are a specific type of synthetic media focused on realistic manipulation of a person’s face, voice, or identity, often for deception or parody.

Detection and responsible use

To use synthetic media responsibly, organizations often rely on:

- Disclosure that content is AI-generated or AI-assisted

- Content provenance such as cryptographic signing and authenticity metadata

- Watermarking with visible or invisible markers added by tools or platforms

- Human review for sensitive use cases like politics, healthcare, and finance

- Consent and rights management for voices, faces, and copyrighted material
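Content provenance works by binding authenticity metadata to the exact bytes of a file, so any later edit becomes detectable. The following is a minimal sketch of that idea, assuming a shared secret key and Python's standard `hashlib`, `hmac`, and `json` modules. The record fields (`generator`, `ai_generated`) and the key are hypothetical, and the HMAC tag stands in for the public-key signatures and embedded manifests that real provenance standards such as C2PA use.

```python
import hashlib
import hmac
import json

# Hypothetical shared key for illustration only; real provenance systems
# (for example C2PA) use public-key signatures and certificate chains instead.
SIGNING_KEY = b"demo-key-not-for-production"


def _tag(metadata: dict) -> str:
    """HMAC-SHA256 over canonical JSON, so equal metadata gives an equal tag."""
    payload = json.dumps(metadata, sort_keys=True).encode("utf-8")
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def make_provenance_record(content: bytes, generator: str, ai_generated: bool) -> dict:
    """Build an authenticity record bound to the exact bytes of the content."""
    metadata = {
        "sha256": hashlib.sha256(content).hexdigest(),
        "generator": generator,        # hypothetical field: which tool produced it
        "ai_generated": ai_generated,  # hypothetical field: disclosure flag
    }
    return {"metadata": metadata, "tag": _tag(metadata)}


def verify_provenance_record(content: bytes, record: dict) -> bool:
    """Return True only if both the content and its metadata are unmodified."""
    metadata = record["metadata"]
    if hashlib.sha256(content).hexdigest() != metadata["sha256"]:
        return False  # the media bytes changed after the record was made
    # Constant-time comparison avoids leaking how much of the tag matched.
    return hmac.compare_digest(_tag(metadata), record["tag"])


if __name__ == "__main__":
    image_bytes = b"stand-in for image file bytes"
    record = make_provenance_record(image_bytes, "example-image-model", True)
    print(verify_provenance_record(image_bytes, record))
    print(verify_provenance_record(image_bytes + b"edited", record))
```

Because the tag covers the metadata as well as the content hash, quietly flipping `ai_generated` to `False` after the fact also fails verification.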

Where synthetic media is used

Synthetic media is commonly used in:

- Advertising and brand content for fast creative testing and variations

- Film, games, and animation for character creation and scene generation

- E-learning and corporate training with AI presenters and localized narration

- Customer support through AI avatars and automated video explainers

- E-commerce for product imagery, mockups, and virtual try-on experiences

FAQ

What does “Synthetic Media” mean in the context of face recognition search engines?

Synthetic media refers to images, videos, or audio that are generated or significantly altered by AI (for example, GAN-generated faces, face swaps, or heavily AI-edited portraits). In face recognition search engines, synthetic media matters because it can create convincing “people” who don’t exist, or it can alter a real person’s appearance enough to change which matches appear.

How can synthetic (AI-generated) faces affect face recognition search results?

Synthetic faces can lead to confusing outcomes: (1) an AI-generated face may still match multiple real people “a little,” producing many weak look-alike hits; (2) an edited or face-swapped image of a real person may match the source person rather than the claimed identity; and (3) synthetic faces reused across sites can create the illusion of a consistent online footprint for a fake persona.
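To see why a face that belongs to no one can still produce many weak hits, it helps to know that face search tools commonly compare faces as numeric embedding vectors. Here is a toy illustration, assuming cosine similarity, made-up 4-dimensional embeddings, and hypothetical thresholds (`STRONG`, `WEAK`); it is not how FaceCheck.ID or any specific tool scores matches.

```python
import math

# Hypothetical thresholds for illustration; real tools calibrate their own.
STRONG = 0.8
WEAK = 0.4


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_matches(query: list[float], gallery: dict[str, list[float]]) -> list[tuple[str, float, str]]:
    """Score every gallery face against the query, label it, and sort best first."""
    results = []
    for name, embedding in gallery.items():
        score = cosine_similarity(query, embedding)
        if score >= STRONG:
            label = "strong"
        elif score >= WEAK:
            label = "weak look-alike"
        else:
            label = "no match"
        results.append((name, round(score, 3), label))
    return sorted(results, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    # Toy embeddings; real face embeddings have hundreds of dimensions.
    gallery = {
        "person_a": [1.0, 0.0, 0.0, 0.0],
        "person_b": [0.0, 1.0, 0.0, 0.0],
        "person_c": [0.0, 0.0, 0.6, 0.8],
    }
    # A synthetic face that blends traits of several people sits "between" them.
    synthetic_query = [0.5, 0.5, 0.5, 0.5]
    for row in rank_matches(synthetic_query, gallery):
        print(row)
```

In this toy setup every real person scores as a weak look-alike and none crosses the strong threshold, which is the "matches many people a little" pattern described in the answer above.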

What are common signs that a face-search result might involve synthetic media rather than a real photo trail?

Common clues include: many matches with low-to-medium similarity but no strong “anchor” source; the same face appearing across unrelated accounts with different names/biographies; images that look overly airbrushed or uniform across lighting and skin texture; and profile photos that vary dramatically in style while the face stays oddly consistent. These are warning signs to verify carefully rather than conclude it’s the same person.
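The score- and label-based clues in that answer can be turned into a rough triage check. This is a minimal sketch, assuming the search tool lets you record each match as a similarity score plus the account and name shown with it; the `Match` fields, the 0-100 scale, and the thresholds are hypothetical placeholders. The visual clues (airbrushed skin, inconsistent styles) are not modeled, and a fired sign means "verify carefully", not "this is fake".

```python
from dataclasses import dataclass

# Hypothetical values on a 0-100 similarity scale; tune them for your tool.
STRONG_MATCH = 85   # at or above this, treat the hit as the same face
MIN_WEAK_HITS = 3   # how many weaker hits count as "many"


@dataclass
class Match:
    score: float   # similarity score reported by the search tool
    account: str   # account or page where the face appeared
    name: str      # name or bio label shown with the photo


def synthetic_warning_signs(matches: list[Match]) -> list[str]:
    """Return the warning signs that fired; an empty list means none did."""
    signs = []
    strong = [m for m in matches if m.score >= STRONG_MATCH]
    weak = [m for m in matches if m.score < STRONG_MATCH]

    # Clue: many low-to-medium matches but no strong "anchor" source.
    if not strong and len(weak) >= MIN_WEAK_HITS:
        signs.append("many weak look-alike hits, no anchor source")

    # Clue: the same face on different accounts under different names.
    names = {m.name.strip().lower() for m in strong}
    accounts = {m.account for m in strong}
    if len(names) > 1 and len(accounts) > 1:
        signs.append("same face reused under different names")

    return signs


if __name__ == "__main__":
    results = [
        Match(72, "site-a.example/u1", "Anna K."),
        Match(68, "site-b.example/u2", "J. Smith"),
        Match(64, "site-c.example/u3", "Maria L."),
    ]
    print(synthetic_warning_signs(results))
```

Names are compared case-insensitively so that "Anna" and "anna" on two accounts are not mistaken for a persona change.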

What should I do if I suspect an uploaded image is a deepfake or AI-edited portrait when using a face recognition search engine?

Use multiple inputs and validation steps: try a different frame/photo of the same person (preferably a natural, unfiltered image), crop tightly to the face, and compare top results for consistent non-face cues (tattoos, moles, ears, hairline, context, timestamps). Treat results as leads only, and avoid making accusations based solely on face similarity when synthetic manipulation is possible.

How does synthetic media change the way I should interpret results from tools like FaceCheck.ID?

If synthetic media is involved, treat FaceCheck.ID (or any similar tool) as a way to discover potential reuse and related appearances—not as proof of identity. Prioritize results that link to credible, original sources (not reposts), cross-check multiple photos of the same person, and assume higher false-match risk when images look AI-generated, face-swapped, or heavily filtered.
