Deepfake Detection Explained: Spot AI-Manipulated Media

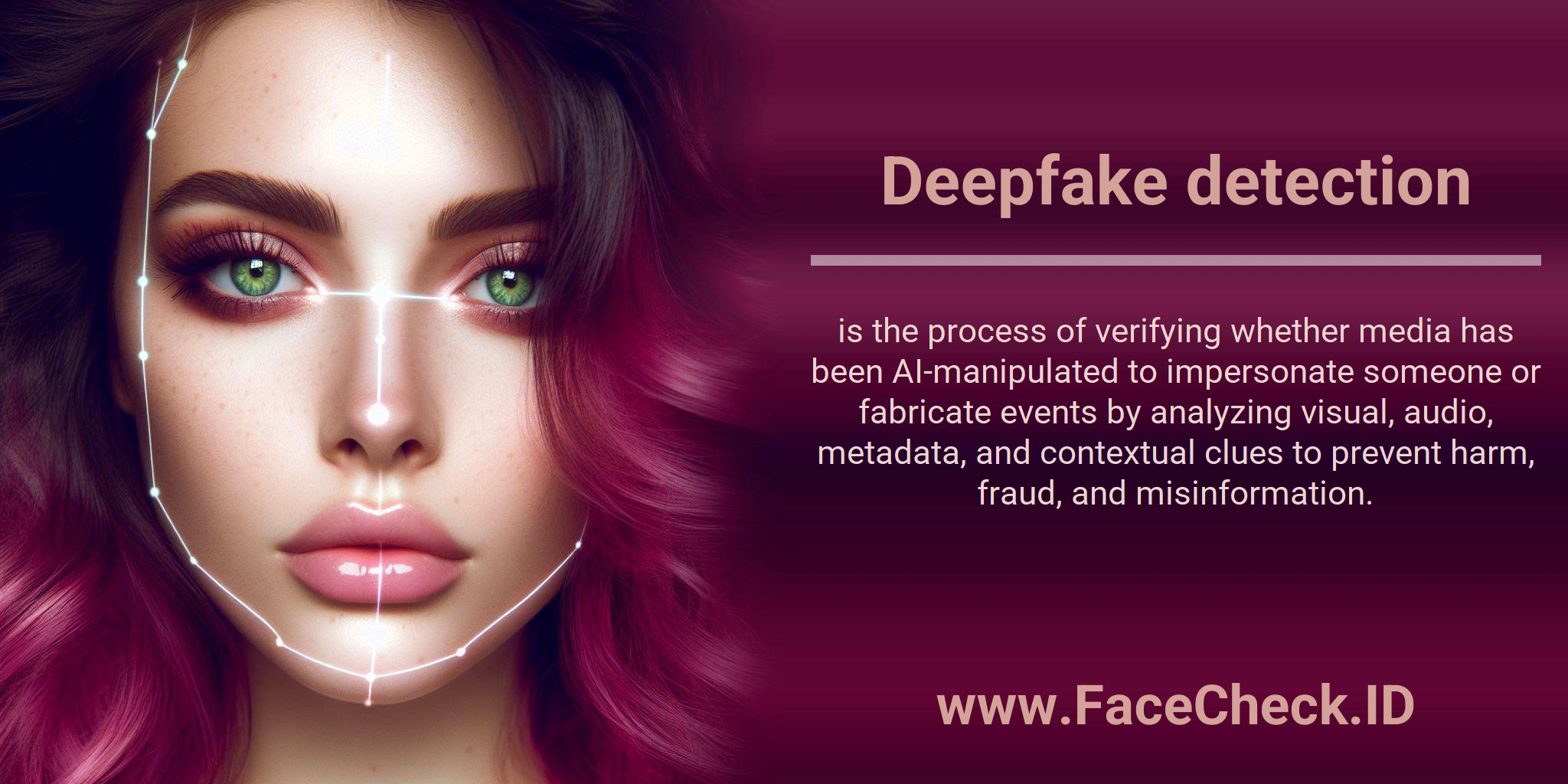

Deepfake detection is the process of identifying whether a video, image, audio clip, or text has been manipulated using artificial intelligence to impersonate a real person or fabricate events. The goal is to spot synthetic or altered media before it causes harm, spreads misinformation, or enables fraud.

Deepfakes are often created with generative AI models that can swap faces, clone voices, or generate realistic speech. Deepfake detection uses a mix of automated tools, forensic techniques, and human review to verify authenticity.

Why deepfake detection matters

Deepfakes can be convincing and fast to produce, which makes them a serious risk for:

- Online scams and fraud, such as CEO voice impersonation and fake customer support calls

- Misinformation and political manipulation, including fabricated speeches or events

- Reputation damage, like fake videos of public figures or employees

- Identity theft, where someone’s face or voice is used without consent

- Content integrity, especially for newsrooms, courts, and brand communications

What deepfake detection looks for

Detection methods typically focus on inconsistencies that generative models struggle to reproduce perfectly, such as:

- Visual artifacts: unnatural skin texture, blurry edges, odd lighting, mismatched shadows, warped teeth, inconsistent reflections

- Motion and geometry issues: strange head turns, facial landmark drift, irregular blinking patterns, lip sync errors

- Audio anomalies: robotic tone, inconsistent breath sounds, unnatural pauses, spectral patterns common in voice cloning

- Metadata and provenance signals: missing camera data, unusual edit history, mismatched timestamps, inconsistent compression patterns (for example, traces of double compression)

- Context mismatches: background noise that does not fit the setting, incorrect dialect, wrong clothing for the event, implausible claims
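Irregular blinking, one of the motion cues listed above, is often measured with an eye aspect ratio (EAR). EAR is the ratio of the eye's vertical landmark distances to its horizontal width, and it drops sharply when the eye closes. The following is a minimal, standard-library-only sketch. It assumes that six eye landmarks per frame have already been extracted by some upstream face-landmark detector, which is not shown. The 0.2 threshold and the two-frame minimum are illustrative assumptions, not tuned values.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six eye landmarks ordered p1..p6, where p1 and p4 are the eye corners."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)  # upper lid to lower lid, twice
    horizontal = math.dist(p1, p4)                    # corner to corner
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
            continue
        if run >= min_frames:
            blinks += 1
        run = 0
    if run >= min_frames:  # clip ended in the middle of a blink
        blinks += 1
    return blinks


def blinks_per_minute(ear_series: Sequence[float], fps: float, **kwargs) -> float:
    """Blink rate over the whole clip; kwargs are passed through to count_blinks."""
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, **kwargs) / minutes if minutes > 0 else 0.0
```

An unusually low or suspiciously regular blink rate over a long clip is only a weak signal on its own. It is most useful when combined with the other cues above.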

Common deepfake detection techniques

Deepfake detection is usually done with a layered approach:

Machine learning classifiers

AI models are trained on real and manipulated media to recognize patterns typical of deepfakes. Modern systems may analyze individual frames, facial landmarks, frequency-domain features, and audio spectrograms.
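The sketch below illustrates one frequency-domain feature. It computes what fraction of a signal's non-DC spectral energy lies above a cutoff frequency. Some generators have been reported to leave periodic upsampling traces that show up as unusual high-frequency energy. Real detectors, however, typically compute 2-D spectra over image patches and learn from them. This 1-D, pure-Python version uses a direct O(n^2) DFT and an illustrative `cutoff` of 0.25 cycles per sample. It only shows the idea of a hand-made feature that a classifier could consume.

```python
import cmath
import math
from typing import List, Sequence


def dft_power(signal: Sequence[float]) -> List[float]:
    """Power spectrum |X[k]|^2 of a real 1-D signal, via a direct O(n^2) DFT."""
    n = len(signal)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))) ** 2
        for k in range(n)
    ]


def high_freq_energy_ratio(signal: Sequence[float], cutoff: float = 0.25) -> float:
    """Share of non-DC energy at normalized frequencies >= cutoff (0.5 = Nyquist)."""
    n = len(signal)
    power = dft_power(signal)
    total = high = 0.0
    # Bins 1..n//2 cover 0 < f <= 0.5; for real input the upper half mirrors them.
    for k in range(1, n // 2 + 1):
        total += power[k]
        if k / n >= cutoff:
            high += power[k]
    return high / total if total > 0 else 0.0


if __name__ == "__main__":
    smooth = [math.cos(2 * math.pi * t / 32) for t in range(32)]  # one slow cycle
    jittery = [(-1) ** t for t in range(32)]                        # flips every sample
    print(f"smooth:  {high_freq_energy_ratio(smooth):.3f}")
    print(f"jittery: {high_freq_energy_ratio(jittery):.3f}")
```

The slowly varying cosine scores near 0 and the sample-to-sample alternation scores near 1. A learned model would use many such features, computed over image rows, patches, or audio frames.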

Digital forensics

Forensic checks inspect compression artifacts, file structure, editing traces, and pixel-level inconsistencies. These methods can help determine whether content was edited and sometimes how.
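Here is a minimal, standard-library-only sketch of a "file structure" check. It walks the header segments of a JPEG and reports a few traits: EXIF presence, the number of quantization-table (DQT) segments, and which APPn segments exist. Comparing these against what a claimed source device normally writes can surface re-saves or editor fingerprints. The report fields are hypothetical choices for illustration. Many platforms strip EXIF on upload, so missing metadata alone is not evidence of manipulation.

```python
import struct
from typing import Dict, List, Tuple

SOS, APP1, DQT = 0xDA, 0xE1, 0xDB  # Start of Scan, APP1 (EXIF), quantization tables


def jpeg_segments(data: bytes) -> List[Tuple[int, bytes]]:
    """Walk JPEG header segments from SOI up to and including Start of Scan (SOS)."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    segments: List[Tuple[int, bytes]] = []
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xFF:  # fill byte before the real marker
            pos += 1
            continue
        # Segment length is big-endian and counts its own two bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if length < 2:
            raise ValueError(f"corrupt segment length at offset {pos}")
        segments.append((marker, data[pos + 4:pos + 2 + length]))
        if marker == SOS:  # entropy-coded image data follows; stop parsing headers
            break
        pos += 2 + length
    return segments


def structure_report(data: bytes) -> Dict[str, object]:
    """Summarize header traits an analyst might compare against a device's usual output."""
    segments = jpeg_segments(data)
    markers = [m for m, _ in segments]
    return {
        "has_exif": any(m == APP1 and p.startswith(b"Exif\x00\x00") for m, p in segments),
        "dqt_segments": markers.count(DQT),
        "app_markers": sorted({f"APP{m - 0xE0}" for m in markers if 0xE0 <= m <= 0xEF}),
        "reached_scan": SOS in markers,
    }
```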

Liveness and challenge tests

Used in identity verification, these checks ask a user to perform actions in real time, like turning their head or reading a prompt. This helps block replay attacks and pre-generated fakes.
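The core of a challenge test is that the request is unpredictable and short-lived. This minimal sketch assumes that some upstream video model, not shown, turns the user's recorded response into a list of detected actions. The action names, the 10-second window, and the three-action length are all illustrative assumptions.

```python
import secrets
import time
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical action vocabulary; a real system would match what its motion detectors can recognize.
ACTIONS = ["turn_left", "turn_right", "blink_twice", "smile", "look_up"]


@dataclass
class Challenge:
    nonce: str
    actions: List[str]
    issued_at: float
    ttl_seconds: float
    used: bool = False


def issue_challenge(n_actions: int = 3, ttl_seconds: float = 10.0) -> Challenge:
    """Create an unpredictable, short-lived challenge that a pre-recorded video cannot anticipate."""
    rng = secrets.SystemRandom()
    return Challenge(
        nonce=secrets.token_hex(16),
        actions=[rng.choice(ACTIONS) for _ in range(n_actions)],
        issued_at=time.monotonic(),
        ttl_seconds=ttl_seconds,
    )


def verify_response(ch: Challenge, nonce: str, observed_actions: List[str],
                    now: Optional[float] = None) -> bool:
    """Accept only a fresh, single-use response whose observed actions match the request in order."""
    now = time.monotonic() if now is None else now
    if ch.used or not secrets.compare_digest(nonce, ch.nonce):
        return False
    ch.used = True  # one attempt per challenge, so a captured response cannot be replayed
    if now - ch.issued_at > ch.ttl_seconds:
        return False
    return observed_actions == ch.actions
```

The random action sequence, the expiry, and the single-use nonce together defeat simple replay of a recorded clip. On its own this does not stop a real-time face swap, which is why challenge tests are layered with the classifiers and forensic checks above.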

Watermarking and content provenance

Some tools embed tamper-resistant signals (watermarks) at creation time or attach signed metadata to prove origin and editing history. Provenance works best when it is established before content spreads, ideally at the moment of capture or generation.
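Signed metadata can be illustrated with a small sketch that binds a content hash and its metadata under one signature. Changing either the file or the claimed history then breaks verification. For simplicity this uses a shared-secret HMAC from Python's standard library. Real provenance standards such as C2PA use public-key signatures and certificate chains, so anyone can verify without holding the signing key. The manifest layout here is hypothetical.

```python
import hashlib
import hmac
import json


def _canonical(manifest_core: dict) -> bytes:
    # Stable serialization so the same manifest always produces the same bytes to sign.
    return json.dumps(manifest_core, sort_keys=True, separators=(",", ":")).encode()


def sign_manifest(content: bytes, metadata: dict, key: bytes) -> dict:
    """Bind a SHA-256 of the content and its metadata under one HMAC-SHA256 signature."""
    core = {"sha256": hashlib.sha256(content).hexdigest(), "metadata": metadata}
    return {**core, "signature": hmac.new(key, _canonical(core), hashlib.sha256).hexdigest()}


def verify_manifest(content: bytes, manifest: dict, key: bytes) -> bool:
    """True only if the manifest is untouched and still matches these exact content bytes."""
    core = {"sha256": manifest.get("sha256"), "metadata": manifest.get("metadata")}
    expected = hmac.new(key, _canonical(core), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest.get("signature", "")):
        return False  # manifest was altered, or signed with a different key
    return hmac.compare_digest(core["sha256"], hashlib.sha256(content).hexdigest())
```

Because the signature covers a hash of the exact bytes, a re-encoded copy no longer verifies, even if it looks identical. This is one reason provenance is most useful when it travels with the original file from creation onward.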

Human review and verification

Experts and trained moderators review high-risk content, cross-check sources, and validate context. Human review matters most when automated detectors return low-confidence or conflicting results.
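The layered approach, with its hand-off to human review, can be sketched as a simple triage rule. The sketch assumes each layer already emits a score between 0 and 1, where higher means more likely manipulated. The layer names, weights, and the 0.3/0.8 thresholds are hypothetical. A weighted average is just one simple way to fuse scores, not a standard method.

```python
from typing import Dict


def combined_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of per-layer manipulation scores; layers without a weight are skipped."""
    used = [name for name in scores if weights.get(name, 0) > 0]
    total_weight = sum(weights[name] for name in used)
    if total_weight == 0:
        raise ValueError("no weighted layers produced a score")
    return sum(scores[name] * weights[name] for name in used) / total_weight


def route(score: float, low: float = 0.3, high: float = 0.8) -> str:
    """Decide automatically only at the extremes; send the uncertain middle band to a human."""
    if score >= high:
        return "flag"
    if score <= low:
        return "pass"
    return "human_review"


if __name__ == "__main__":
    layer_scores = {"visual_classifier": 0.9, "audio_classifier": 0.5, "metadata": 0.4}
    layer_weights = {"visual_classifier": 2.0, "audio_classifier": 1.0, "metadata": 1.0}
    s = combined_score(layer_scores, layer_weights)
    print(round(s, 3), route(s))
```

Layers that produced no score are simply left out of the average. This covers cases such as a clip with no audio track. Widening the band between `low` and `high` sends more items to reviewers, which trades reviewer workload for fewer automated mistakes.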

Deepfake detection vs deepfake prevention

- Deepfake detection identifies manipulated content once it exists, for example at upload time or while it is being shared.

- Deepfake prevention reduces the chance of successful impersonation, for example with stronger identity verification, watermarking at generation time, and secure media workflows.

In practice, organizations combine both.

Where deepfake detection is used

- Social media platforms and content moderation pipelines

- Banking, fintech, and payment verification

- Customer support and call center fraud prevention

- Hiring and remote onboarding identity checks

- Journalism, fact checking, and media verification

- Legal investigations and eDiscovery

- Brand protection and executive security

Limitations and challenges

Deepfake detection is imperfect, in part because generation techniques improve quickly. Common challenges include:

- Arms race dynamics: new generators reduce the artifacts that existing detectors were trained to spot

- Low-quality or compressed media: detection signals can be destroyed by re-uploads and recompression

- Partial manipulations: only the voice or only the face is altered, so much of the media is genuine

- False positives and false negatives: legitimate content may be flagged, and sophisticated fakes may pass

- Adversarial tactics: attackers may intentionally distort media to evade detectors
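False positives and false negatives are easier to reason about with numbers. The sketch below computes standard error rates from a confusion matrix, then applies Bayes' rule to show why base rates matter. Take an illustrative detector that catches 95% of fakes and wrongly flags 5% of real items, used where fakes make up 1% of what is scanned. In that setting, only about 16% of flagged items are actually fake (0.0095 / (0.0095 + 0.0495)). All figures are hypothetical.

```python
from typing import Dict


def error_rates(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Rates from a confusion matrix where 'positive' means 'flagged as fake'."""
    return {
        "false_positive_rate": fp / (fp + tn),  # real media wrongly flagged
        "false_negative_rate": fn / (fn + tp),  # fakes that slipped through
        "precision": tp / (tp + fp),            # share of flags that are truly fake
    }


def precision_at_prevalence(tpr: float, fpr: float, prevalence: float) -> float:
    """Bayes' rule: fraction of flags that are real fakes when fakes are `prevalence` of all items."""
    true_flags = tpr * prevalence
    false_flags = fpr * (1 - prevalence)
    return true_flags / (true_flags + false_flags)


if __name__ == "__main__":
    # Illustrative numbers only, not measurements of any real detector.
    print(round(precision_at_prevalence(tpr=0.95, fpr=0.05, prevalence=0.01), 3))
```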

Key takeaway

Deepfake detection helps assess whether digital media is authentic by analyzing visual, audio, metadata, and contextual cues. It is a critical defense against impersonation, misinformation, and identity-based attacks in an AI-driven media landscape.

FAQ

What is “Deepfake Detection” in the context of face recognition search engines?

Deepfake Detection is the set of techniques used to assess whether a face image (or a frame grabbed from video) is likely synthetic or manipulated (e.g., face-swap, AI-generated portrait, heavy retouching) before you treat face-search matches as trustworthy leads. In a face recognition search workflow, it helps reduce the risk of chasing results caused by a fake source image rather than a real person’s photo trail.

Why does deepfake content increase wrong-person matches in face recognition search results?

Deepfakes can blend identity cues from multiple people or introduce artifacts that change the face geometry and skin texture. A face search engine may still produce “similar face” matches because embeddings can remain close even when the image is manipulated, which can lead to look-alike results, mixed identities across sources, or matches that reflect the deepfake’s training/reference imagery rather than the claimed person.

If I’m investigating a suspected deepfake, what kind of image should I upload to a face recognition search engine?

Use the clearest, most neutral face you can obtain: a sharp, front-facing frame with minimal blur, minimal beauty filters, and good lighting. Avoid frames with motion blur, extreme expressions, heavy compression, or strong stylization. If the image comes from a video, try multiple frames (a neutral expression and several different angles) and check whether the results are consistent across frames. Large inconsistencies can be a warning sign.
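Comparing frames can be made more systematic if you have a face embedding (a numeric vector) for each extracted frame. This minimal sketch assumes the embeddings come from some face-recognition model you run yourself, which is not shown and is not any particular search engine's API. It reports the cosine similarity of the least similar pair of frames. The 0.6 threshold is a placeholder, because meaningful values depend entirely on the embedding model.

```python
import math
from itertools import combinations
from typing import Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def frame_consistency(embeddings: Sequence[Sequence[float]],
                      min_similarity: float = 0.6) -> Tuple[float, bool]:
    """Return the least similar frame pair's score and whether it clears `min_similarity`."""
    if len(embeddings) < 2:
        raise ValueError("need at least two frame embeddings to compare")
    worst = min(cosine_similarity(a, b) for a, b in combinations(embeddings, 2))
    return worst, worst >= min_similarity
```

A low minimum suggests the frames may not show one consistent face. That is worth investigating before trusting any single search result.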

How can I sanity-check whether a face-search “hit” might be driven by a deepfake rather than a real photo trail?

Cross-check context, not just the face: (1) look for the earliest publication/source and whether it’s reputable, (2) compare multiple images from the same source page to see if the face stays consistent, (3) check if the same face appears under different names/usernames across unrelated sites, and (4) compare results from more than one input photo/frame. If a tool like FaceCheck.ID returns many high-similarity results tied to unrelated identities or inconsistent contexts, treat the matches as investigative leads only and prioritize corroboration.
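Check (3) above can be partly automated once you have recorded the hits, either by hand or from an export. This minimal sketch assumes a hypothetical `Hit` record (similarity, displayed name, domain) that you fill in yourself. It is not FaceCheck.ID's data format or API. The 0.85 cutoff and the crude name normalization (trim and lowercase) are illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Sequence, Set


@dataclass
class Hit:
    similarity: float  # match score noted from the search tool, assumed to be in [0, 1]
    name: str          # name or username shown on the source page
    domain: str        # site where the match was found


def identity_spread(hits: Sequence[Hit], min_similarity: float = 0.85) -> Dict[str, object]:
    """Group strong matches by the identity they are attached to."""
    names: Dict[str, Set[str]] = defaultdict(set)
    for hit in hits:
        if hit.similarity >= min_similarity:
            names[hit.name.strip().lower()].add(hit.domain)
    return {
        "distinct_names": len(names),
        "names": {name: sorted(domains) for name, domains in names.items()},
        # Near-identical faces attached to several identities: corroborate before trusting.
        "needs_corroboration": len(names) > 1,
    }
```

Name matching this naive treats "J. Smith" and "John Smith" as different people. The output is therefore a prompt for manual review, not a conclusion.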

Does deepfake detection “prove” an image is fake, and how should I use it alongside tools like FaceCheck.ID?

No. Deepfake detection is usually probabilistic and can produce false positives (real images flagged) and false negatives (fakes missed), especially with low-quality or heavily compressed media. Use it to set your level of caution: if an image looks suspicious, run searches using alternative photos, validate matches through source credibility and independent corroboration, and avoid concluding identity from a single face-search result, even when using face-search tools such as FaceCheck.ID.

Recommended Posts Related to deepfake detection

- How to Spot a Catfish in 2025: Red Flags in Fake Dating Profiles

  AI deepfake detection: AI-powered tools can now scan profile images and video calls to detect manipulation. The future of safe online dating may rely on crowd reporting + blockchain verification + AI deepfake detection working together.

- Yilong Ma: Elon Musk's Doppelgänger or a Deepfake Masterpiece?

  Deepware's scanner uses advanced AI models to detect signs of digital manipulation in videos, making it a reliable source for deepfake detection. While Deepware's AI models are sophisticated, no deepfake detection system is infallible.

- How to Spot a Catfish Online in Under 60 Seconds with FaceCheck.ID

  AI/Deepfake Detection. How accurate is the AI/deepfake detection?

- Find & Remove Deepfake Porn of Yourself: Updated 2025 Guide

  Stay informed on new protections: laws and tech are evolving, so follow news about deepfake detection, watermarking, and new regulations; organizations listed above often announce new initiatives.